The Exploring Mind

A Description–Experience Framework of the Psychology of Risk

How Experimental Methods Shape Views on Human Rationality and Competence

How Attentional Biases in Decisions From Experience Translate Into Nonlinear Probability Weighting

Deliberate Ignorance in Societal Transformations

Many normative and descriptive theories of human choice are silent on how people search for and learn from novel information. This reticence seems to suggest that considering how people explore and update their knowledge of the world contributes little to comprehending how they cope with uncertainty when making decisions. We disagree. Facing the ocean of uncertainty, the human mind cannot help but explore: visually searching for targets of interest, searching its semantic memory, or looking up external information in the world’s information ecology. These processes, of course, come with their own perils, as the COVID-19 infodemic has demonstrated; this only underscores how important it is to understand them. Unless decision scientists understand cognition as processes of exploration and learning, they will fail to understand key aspects of the boundedly rational mind. This has become increasingly clear since the discovery of the description–experience gap, that is, the finding that choices can systematically diverge depending on whether people actively search for and experience information, thereby reducing uncertainty, or are presented with a symbolic representation of all information at once. We have been exploring some of the description–experience gap’s far-reaching implications, as well as the deliberate refusal to update one’s knowledge (i.e., deliberate ignorance), another fascinating facet of the exploring mind.

A Description–Experience Framework of the Psychology of Risk

The modern world holds countless risks for humanity, both large-scale and intimately personal—from cyberwarfare and climate change to sexually transmitted diseases. The success of countermeasures—so we argued (Hertwig & Wulff, 2022)—depends crucially on how individuals learn about those risks. There are at least two powerful but imperfect teachers of risk. First, people learn by consulting descriptive material, such as warnings, statistics, graphs, and images. More often than not, however, a risk’s fluidity defies precise portrayals. Second, people may learn about risks through personal experience. For instance, at the start of the COVID-19 pandemic, personal experience with the virus was extremely rare, but it has since become common. This matters greatly. Responses to risk can differ systematically depending on whether people learn through one mode, both, or neither. One reason—but by no means the only one—for these differences is the distinct learning dynamics of rare events (typically the risk event) and common events (typically the risk’s nonoccurrence) and their resulting impact on a person.

Building on the description–experience gap, we proposed a new framework of the psychology of risk. Its core is a fourfold pattern of epistemic states (Figure 1). When people face a risky decision, they can be in one of four states of knowledge, depending on whether they have encountered the risk through one learning mode, both, or neither. Drawing on extant behavioral evidence, one can derive predictions about how each of these states shapes behavior. For example, when only descriptive information, such as a fact box, is available (Figure 1, top left), people tend to overweight rare side effects of a vaccine and, ceteris paribus, are more inclined to decide against vaccination. Conversely, when information is gleaned only from personal experience (bottom right), people tend to underweight rare harms, because such harms are seldom, if ever, encountered firsthand. Consequently, they are more inclined to choose vaccination. This fourfold pattern has numerous implications for understanding otherwise puzzling phenomena, such as when and why experts and laypeople hold very different perceptions of risks, or when and why descriptive risk warnings frequently fail to deliver their intended effect. It also suggests new methods for risk communication (see the Boosted Mind section and simulated experience interventions).

Figure 1. A fourfold pattern of epistemic states in the context of the measles, mumps, and rubella (MMR) vaccine, depending on the presence versus absence of descriptive and experiential information.

Adapted from Hertwig and Wulff (2022)

Original figure licensed under CC BY 4.0
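One reason personal experience leads people to underweight rare harms can be illustrated with a small simulation. The sketch below is our own illustration, not code from Hertwig and Wulff (2022), and it uses hypothetical numbers: each simulated person draws n = 10 independent observations of an event with probability p = .05. Under these assumptions, most people never encounter the rare event at all, so the frequency they experience falls short of its true probability.

```python
import random
from statistics import median


def prob_never_seen(p, n):
    """Probability that an event with per-draw probability p never occurs in n independent draws."""
    return (1.0 - p) ** n


def experienced_frequencies(p, n, n_people, seed=0):
    """Relative frequency of the rare event experienced by each simulated person (n draws each)."""
    rng = random.Random(seed)
    return [sum(rng.random() < p for _ in range(n)) / n for _ in range(n_people)]


# Hypothetical values: a rare harm with p = .05, experienced through 10 draws.
p, n = 0.05, 10
print(round(prob_never_seen(p, n), 3))  # 0.599
freqs = experienced_frequencies(p, n, n_people=10_000)
print(median(freqs))  # 0.0: the typical simulated person has never experienced the harm
print(sum(f < p for f in freqs) / len(freqs))  # share who experienced it less often than p
```

Because (1 − p)^n shrinks as n grows, this particular source of underweighting weakens with more extensive experience.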

How Experimental Methods Shape Views on Human Rationality and Competence

The heuristics-and-biases program advanced by Tversky and Kahneman in the 1970s has produced an extensive catalog of how people’s judgments systematically deviate from norms of rational reasoning. These findings have led to the conclusion that people are largely incapable of sound statistical reasoning and decision making. Yet the earlier intuitive-statistician program had reached a starkly different conclusion: It found substantial correspondence between people’s judgments and rules from probability theory and statistics. Indeed, a 1967 review concluded that people could be correctly understood as intuitive statisticians who can make reasonably accurate inferences about risks and uncertainties. How could psychology change its conclusions about people’s mental competence so dramatically? After all, people’s statistical intuitions did not suddenly go astray.

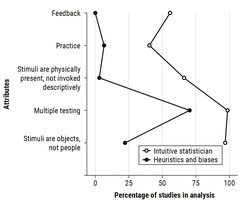

To tackle this question, we (Lejarraga & Hertwig, 2021) examined the methods of more than 600 experiments from both lines of research. We found that Tversky and Kahneman established a new experimental protocol for measuring statistical intuitions. As Figure 2 shows, before their work, researchers used an experiential protocol that allowed people to learn probabilities from direct experience. Usually, people could practice, sample information sequentially, and adjust their responses in light of feedback. Tversky and Kahneman’s experiments replaced this with a descriptive protocol, commonly text-based scenarios that tended to ask for only a few or even one-off judgments. People had little opportunity to practice or to learn from feedback.

Figure 2. Classification of experimental protocols according to five attributes.

Here’s the rub: The experimental methods researchers use are not neutral tools. They can shape people’s judgments. People do not seem to be very good at solving word problems about probabilities, at least not without explicit instructions. But they do seem to be reasonably good intuitive statisticians when given the opportunity to learn through direct experience. Since the 1970s, decision researchers have relied mostly on descriptive protocols. This uniformity in the field’s experimental culture has, in our view, contributed to its lopsided portrait of people as irrational beings.

Figure: American Psychological Association

Adapted from Lejarraga and Hertwig (2021)

How Attentional Biases in Decisions From Experience Translate Into Nonlinear Probability Weighting

Only with a model of the underlying cognitive processes will researchers be able to understand how learning influences people’s weighting of probabilities when making decisions under risk. For a long time, however, researchers have mainly used psychoeconomic functions, devoid of process assumptions, to account for deviations between choice behaviors and their normative benchmarks. Confronting this challenge, we (Zilker, 2022; Zilker & Pachur, 2022) investigated how deviations from normative predictions can result from imbalances in attention allocation. We did so by linking two influential frameworks: cumulative prospect theory (CPT) and the attentional drift diffusion model (aDDM). CPT describes the impact of risky outcomes on preferences in terms of nonlinear weighting of probabilities. The aDDM formalizes the finding that attentional biases toward an option can shape preferences within a sequential sampling process.
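To make the notion of nonlinear probability weighting concrete, the sketch below implements one widely used two-parameter weighting function, the linear-in-log-odds form, in which γ (gamma) controls curvature (γ < 1 yields an inverse-S shape that overweights small and underweights large probabilities) and δ (delta) controls elevation. We assume this form and a power value function v(x) = x^α for gains purely for illustration; the exact specification used by Zilker and Pachur (2022) may differ, and the parameter values shown are hypothetical.

```python
def weight(p, gamma, delta):
    """Linear-in-log-odds probability weighting: delta*p^gamma / (delta*p^gamma + (1-p)^gamma).

    gamma < 1 gives an inverse-S curve; a larger delta raises the whole curve (elevation).
    """
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    numerator = delta * p ** gamma
    return numerator / (numerator + (1.0 - p) ** gamma)


def cpt_value_simple_gain(x, p, alpha, gamma, delta):
    """CPT value of a gamble paying x > 0 with probability p and 0 otherwise (gains only)."""
    return weight(p, gamma, delta) * x ** alpha


# Hypothetical parameters: gamma = 0.6 (inverse-S), delta = 1.0 (neutral elevation).
for p in (0.01, 0.05, 0.5, 0.95):
    print(f"p = {p:.2f} -> w(p) = {weight(p, gamma=0.6, delta=1.0):.3f}")
```

With γ = δ = 1 the function reduces to w(p) = p, that is, linear weighting, so any departure of the fitted parameters from 1 quantifies how far choices deviate from expected-value-style weighting.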

Using the aDDM, we simulated choices between two options while varying the strength of attentional biases toward either option and then modeled the resulting choices with CPT. Changes in preference due to attentional biases in the aDDM were reflected in the parameters of CPT’s weighting function. A reanalysis of behavioral data on decisions from experience then showed that, as the simulation results suggested, people’s attentional biases are linked to patterns in probability weighting (Figure 3).

Figure: American Psychological Association

Adapted from Zilker and Pachur (2022)
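The simulation logic described above can be sketched with a simplified aDDM. In this illustration, which is ours and not the authors’ code, evidence accumulates toward whichever option is currently fixated, the unattended option’s value is discounted by a factor theta, and each fixation lasts a fixed number of time steps and lands on option A with probability p_attend_a, a hypothetical stand-in for the strength of the attentional bias. All parameter values (d, theta, sigma, fixation_steps, bound) are assumptions chosen for illustration, and the subsequent step of fitting CPT to the simulated choices is omitted.

```python
import random


def simulate_addm_choice(v_a, v_b, p_attend_a, rng, d=0.01, theta=0.3,
                         sigma=0.05, fixation_steps=10, bound=1.0,
                         max_steps=10_000):
    """Simulate one choice with a simplified aDDM; returns True if option A is chosen.

    Evidence > 0 favors A. While A is fixated, drift is d * (v_a - theta * v_b);
    while B is fixated, drift is d * (theta * v_a - v_b).
    """
    evidence = 0.0
    attend_a = True
    for step in range(max_steps):
        if step % fixation_steps == 0:
            # New fixation: look at A with probability p_attend_a (the attentional bias).
            attend_a = rng.random() < p_attend_a
        drift = d * (v_a - theta * v_b) if attend_a else d * (theta * v_a - v_b)
        evidence += drift + rng.gauss(0.0, sigma)  # drift plus Gaussian noise
        if evidence >= bound:
            return True
        if evidence <= -bound:
            return False
    # No boundary reached within max_steps: pick the currently favored option.
    return evidence > 0.0


def choice_share_a(v_a, v_b, p_attend_a, n_trials=1000, seed=1):
    """Proportion of simulated trials on which option A is chosen."""
    rng = random.Random(seed)
    n_a = sum(simulate_addm_choice(v_a, v_b, p_attend_a, rng) for _ in range(n_trials))
    return n_a / n_trials


# Two equally valued (hypothetical) options; only the attentional bias toward A varies.
shares = {bias: choice_share_a(1.0, 1.0, bias) for bias in (0.2, 0.5, 0.8)}
for bias, share in shares.items():
    print(f"P(attend A) = {bias:.1f} -> P(choose A) = {share:.2f}")
```

With two equally valued options, shifting attention toward A pushes its choice share well above one half, and shifting attention toward B pushes it below one half. In the analyses described above, such attention-driven shifts in preference were what surfaced in the parameters of CPT’s weighting function.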

By revealing that nonlinear probability weighting can arise from unequal attention allocation during a sequential sampling process, these analyses shed light on how cognitive processing can give rise to and shape psychoeconomic functions. Last but not least, let us emphasize that attentional biases need not be irrational. Zilker (2022) showed that attentional biases can increase the reward rate (the amount of reward obtained per unit of time invested in the choice), even if they are detrimental to accuracy (the tendency to choose the highest-value option).
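The two performance measures named above can be computed directly from trial records. The toy example below uses invented numbers, not data from Zilker (2022), and a hypothetical Trial record: a careful strategy that picks the best option more often but takes twice as long per choice ends up with a lower reward rate than a hastier, less accurate one.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    reward: float       # value of the option actually chosen
    best_reward: float  # value of the highest-value option on offer
    time: float         # time invested in the choice (e.g., seconds)


def accuracy(trials):
    """Share of trials on which the highest-value option was chosen."""
    return sum(t.reward == t.best_reward for t in trials) / len(trials)


def reward_rate(trials):
    """Total reward obtained divided by total time invested."""
    return sum(t.reward for t in trials) / sum(t.time for t in trials)


# Invented data: 10 choices each, best option worth 10, the alternative worth 8.
careful = [Trial(10, 10, 3.0)] * 9 + [Trial(8, 10, 3.0)]
hasty = [Trial(10, 10, 1.5)] * 7 + [Trial(8, 10, 1.5)] * 3
for name, trials in (("careful", careful), ("hasty", hasty)):
    print(name, accuracy(trials), round(reward_rate(trials), 3))
# careful 0.9 3.267
# hasty 0.7 6.267
```

Because the reward rate divides by time, a bias that speeds up decisions can pay off even when it costs some accuracy, as long as the options differ only modestly in value.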

Deliberate Ignorance in Societal Transformations

When the government of reunified Germany opened up the Stasi files—highly sensitive information on millions of individuals gathered by the East German secret service—it expected that everyone would want to access theirs. Viewing their file would allow people, so the official logic went, to reclaim the life that had been stolen from them. Yet decades later, not even half of the 5.25 million people estimated to believe that the Stasi had kept a file on them have applied to read their file.

We (Hertwig & Ellerbrock, 2022; see also Hertwig & Engel, 2020) combined survey methods with life-history interviews to identify and describe the reasons people invoke for deliberately ignoring their Stasi file. By far the most prevalent reason people gave for not viewing their file was that its contents were not “relevant.” This lack of relevance, however, by no means suggests that these people no longer cared. On the contrary, the interviews revealed that they felt no amount of knowledge could undo past actions (e.g., a person spying on and betraying another person). Another frequent reason was that people worried they would discover that loved ones or friends had worked as informants; they wanted to avoid the anguish they would feel if their fears were confirmed. A number of respondents questioned the trustworthiness of the Stasi files. Finally, for some people, refusing to read the file was an act of “political opposition,” either because they disapproved of Germany’s reunification or because they remembered positive aspects of East German life and warned against conflating the Stasi with East Germany as a whole.

Taken together, these reasons paint a nuanced picture of individual choices not to know in political and social contexts that transform over time. Many of these reasons are rooted in a desire for harmony and cooperation, even while institutional and collective memory politics emphasize the importance of transparency and justice. The exploring mind is an adaptive one with different priorities and strategies, depending on historical contexts and individual circumstances (see more on this topic in the print magazine accompanying this report). It can also decide, for good reason, to deliberately not explore.
