The Boosted Mind

Today’s citizens encounter highly engineered choice environments in almost all areas of life—in supermarkets and shopping centers, on social media and investment sites, and even at the ballot box. It would be overly optimistic to assume that the designers of these environments—the choice architects—typically act benevolently and with a focus on public welfare. More often, they pursue commercial or other self-serving interests. This systemic divergence between public welfare and commercial interests can lead to “toxic” choice environments—for example, food environments that are “obesogenic” and “diabetogenic”—and to online environments designed to hijack users’ limited attention by means of distraction and manipulation. There is no silver bullet to address the threat that such engineered, non-benevolent choice environments pose to our political, economic, health, and social systems. Many current social and global problems require more than one kind of approach. We suggest, however, that the empowerment of citizens is a key ingredient of any attempt to redress the balance between toxic choice architectures and individual autonomy—and that the behavioral sciences, and especially psychology, have a pivotal role to play here.

Here we report on our work on boosting people’s competences to deal with today’s challenges, particularly in the online and health domains. Our contributions range from theoretical–analytical to empirical and translational work. The latter aims to inform the public and public policy experts about what behavioral science has to offer.

Why Policy Makers Are Tempted to Nudge

Two frameworks of behavior change informed by behavioral science are nudging and boosting. For the former, policy makers use citizens’ cognitive and motivational frailties, in combination with a smartly designed choice architecture, to get (“nudge”) citizens to act in their own best interests (e.g., redesigning a cafeteria in a way that leads “inert” people to consume less calorie-dense food). “Boosting,” in contrast, aims to empower people to make better decisions that are in their own interest by fostering agency and existing competencies or building new ones.

The nudging approach has been criticized on ethical grounds, including concerns that it undermines individual autonomy and rests on a potentially paternalistic premise (i.e., the policy maker makes unfounded assumptions about the preferences of all or at least most individuals). Yet nudging has gained the status of a much-promoted, widely adopted, and even hyped approach to behavioral public policy. Why? To find out, we (Hertwig & Ryall, 2020) assumed a model of dynamic policy making in which policy makers can choose to implement either a nudge or a boost intervention to address a public health problem. One novelty of this setup is that the boost would equip the individual with the competence to overcome a nudge-enabling cognitive bias once and for all. This creates a strategic and, perhaps, ethical conflict for policy makers. Using mathematical and game-theoretic analyses, we identified conditions under which the policy maker’s preferences are not aligned with those of the individual. Specifically, policy makers have a strategic interest in choosing not to boost in order to preserve the option of using the nudge (and its associated bias or weakness) in the future, even though boosting is in the immediate best interests of both the policy maker and the individual. This analysis shows, first, that the dynamics of public policy can be studied formally and, second, that policy makers, cognizant of the nudging intervention’s long-term “option value,” may be inclined to favor soft paternalism over the empowerment of citizens.
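The “option value” argument can be illustrated with a deliberately simplified two-period toy calculation. This is only a sketch for intuition, not the model analyzed in Hertwig and Ryall (2020): the payoff numbers, the two-period structure, and the function name `policy_maker_total` are all assumptions made up for this example.

```python
# Toy two-period illustration of a nudge's "option value".
# All payoffs are hypothetical; this is NOT the model in Hertwig & Ryall (2020).

def policy_maker_total(first_move: str, payoffs: dict) -> float:
    """Total policy-maker payoff over two periods for a given first-period move."""
    if first_move == "boost":
        # Assumption: the boost removes the bias for good, so the
        # nudge is no longer available in the second period.
        return payoffs["boost_now"]
    if first_move == "nudge":
        # Assumption: the bias survives, so the nudge can be reused later.
        return payoffs["nudge_now"] + payoffs["nudge_later"]
    raise ValueError(f"unknown move: {first_move}")


# Hypothetical numbers: boosting pays more immediately,
# but nudging keeps a second-period payoff alive.
payoffs = {"boost_now": 10.0, "nudge_now": 8.0, "nudge_later": 5.0}

moves = ("boost", "nudge")
immediate_best = max(moves, key=lambda m: payoffs[f"{m}_now"])
dynamic_best = max(moves, key=lambda m: policy_maker_total(m, payoffs))

print("Best move judged on the first period alone:", immediate_best)
print("Best move judged over both periods:", dynamic_best)
```

With these made-up numbers, the boost wins when only the first period is considered, whereas the nudge wins once the second period is counted. That reversal is the kind of misalignment the paragraph describes.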

Boosting Digitalization and Democracy

The digital world is playing a larger and larger role in our daily lives. However, it brings with it a variety of challenges and risks, such as manipulative choice architectures, increased hostility online, and the spread of harmful misinformation. Inspired by the ecological and adaptive rationality perspective, we aim both to develop an evidence-based understanding of structures and challenges specific to these new digital environments and to present a roadmap for tackling these challenges. In our research, we took a multipronged approach to this topic, including a systematic review of evidence of the impact of digital media on democracy; surveys and experiments examining people’s preferences and attitudes to, for instance, content moderation dilemmas; and conceptual reviews and experimental approaches to boosting people’s digital competences.

Digital Media and Democracy: A Systematic Review of Evidence

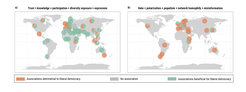

One of the most contentious questions of our time is whether the rapid global uptake of digital media has contributed to a decline in democracy. We (Lorenz-Spreen et al., 2023) conducted a systematic review of studies investigating whether and how digital media impacts citizens’ political behavior. To this end, we synthesized causal and correlational evidence from nearly 500 articles on the relationship between digital media and democracy worldwide. The findings show that social media has a significant impact around the world, but that the effects are intricate and depend on the political context (e.g., established versus emerging democracies). The results also highlight that digital media is a double-edged sword, with both beneficial and detrimental effects on democracy (Figure 1). The positive effects of digital media, such as increased political participation and knowledge, are more notable in countries with emerging democracies, such as those in South America, Africa, and Asia. In contrast, digital media has been linked to an increase in populism, polarization, and a decrease in trust in institutions and the political system, particularly in established democracies such as those in Europe and the United States.

Adapted from Lorenz-Spreen et al. (2023)

Original figure licensed under CC BY 4.0

Resolving Content Moderation Dilemmas Between Free Speech and Harmful Misinformation: A Conjoint Experiment

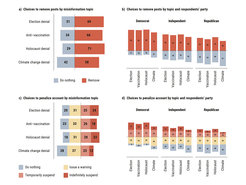

Content moderation of online speech is a moral minefield, especially when two key values come into conflict: upholding freedom of expression and preventing harm caused by misinformation. Currently, moderation decisions are made without any knowledge of how people trade off these two values. However, robust and transparent rules that take into account people’s views and trade-offs are needed to handle this conflict in a principled and evidence-informed way, eventually fostering public support for such rules. We (Kozyreva et al., 2023) examined such moral dilemmas and trade-offs in a conjoint survey experiment in which respondents in the United States were asked to decide whether to take action against various instances of misinformation. The results showed that, despite notable differences between political parties, the majority of respondents preferred stopping harmful misinformation to protecting free speech (Figure 2). Furthermore, people seemed to agree on which features of the content to take into account when deciding whether to remove posts or suspend accounts. People’s willingness to accept such interventionist measures depended on the type of content and increased with the severity of the consequences of the misinformation and with the sharer’s past behavior (i.e., whether the account had previously posted misinformation). In contrast, features of the account itself, such as the person behind the account, their partisanship, and their number of followers, had little to no effect. These findings can inform transparent and legitimate rules for content moderation that command public support.

Adapted from Kozyreva et al. (2023)

Original figure licensed under CC BY 4.0

Digital Challenges and Cognitive Tools for the Digital Age

One of ARC’s long-term goals is to develop a tool kit of interventions that empower internet users to reclaim some control over their digital environments by boosting their digital literacy competency and their cognitive resistance to manipulation. To this end, we (Kozyreva et al., 2020) developed a conceptual map of behavioral and cognitive interventions that aim to counteract key digital challenges (Figure 3). After outlining the main challenges that digital environments pose to human autonomy and decision making, we identified two types of digital boosting tools: (1) those aimed at enhancing people’s agency in their digital environments (e.g., self-nudging, deliberate ignorance) and (2) those aimed at boosting competencies of reasoning and resilience to manipulation (e.g., simple decision aids such as lateral reading, inoculation). Following up on this map, we (Kozyreva et al., 2022) further developed one particular class of boosting interventions. Specifically, we argued that digital information literacy must include the competency of critical ignoring—smartly choosing what to ignore and where to invest one’s limited attentional capacities.

To address the challenge of highly personalized political advertising, that is, the microtargeting of potential voters, we (Lorenz-Spreen et al., 2021) tested an intervention aimed at boosting people’s ability to detect such microtargeting. Two online experiments demonstrated that a short, simple intervention improved participants’ accuracy in identifying ads that were targeted at them by up to 26 percentage points. The intervention merely prompted participants to reflect explicitly on one of their major personality attributes, the one used for microtargeting, by completing a short personality questionnaire. The ability to detect microtargeting based on personality traits increased even without personalized feedback. In contrast, merely providing a description of the targeted personality dimension failed to improve individuals’ detection ability.

Finally, in cooperation with an international group of 26 misinformation researchers, we developed an expert-curated research resource of ten types of evidence-supported interventions as a guide to mitigating misinformation (visit the website Toolbox of Interventions Against Online Misinformation and Manipulation).

Figure 3. Map of challenges and boosts in the digital world.

Adapted from Kozyreva et al. (2020)

Original figure licensed under CC BY 4.0

Communicating Our Research to Policy Makers and the Public

Both citizens and policy makers need reliable knowledge to understand and successfully address key challenges that our societies are facing today. In our work on boosting, digitalization, and democracy, we are therefore also committed to building public-facing resources (e.g., the scienceofboosting.org website) and contributing to the interface between science and policy making. To this end, researchers from our group, together with other global experts and the Joint Research Center of the European Commission, co-authored the technology and democracy report (Lewandowsky et al., 2020) to further the understanding of the influence of online technologies on political behavior and decision making. We were also contributors to the statement “Digitalisierung und Demokratie” (2021) published by the Leopoldina, Germany’s National Academy of Sciences, and to the report on Science Education in an Age of Misinformation (2022) by Stanford University.

Effective science communication is challenging when scientific messages need to take into account a dynamic and changing evidence base and when this communication competes with waves of misinformation (the recent COVID-19 pandemic is a prime example). To help address this challenge, we (Holford et al., 2022) proposed that a new model of science communication is necessary: a collaborative, collective-intelligence approach supported by technology. This approach promises multiple benefits compared to the traditional model of individual scientists communicating their findings (i.e., the “lone wolf” model of science communication). It would facilitate (a) aggregating knowledge from a wider scientific base, (b) contributions from diverse scientific communities, (c) incorporating stakeholder inputs, and (d) faster adaptation to changes in the knowledge base. Such a model could help combat misinformation and ensure that science communication remains informed by the latest evidence. We laid out what science communication as collective intelligence would look like: It should communicate the strength of the evidence, be forthcoming about the uncertainty and error involved in the evidence, be diverse, be open to alternative perspectives, be transparent, build trust, be motivated by the common good, and be easy to understand. Showcasing recent examples of collective intelligence in the science communication domain, we demonstrated how some of those principles can be put into action.

Boosting Health and Medicine

Today, the world is grappling with many urgent and costly public health issues. Examples abound—from the devastating number of fatalities resulting from medical misdiagnosis, to the long-term prescription of strong opioids, to skepticism of and hesitancy to accept potentially life-saving vaccinations. These issues must be addressed to ensure health and well-being around the globe. Here we report on research that again is committed to the goal of boosting citizens’ and experts’ competencies, thus empowering them to make good decisions.

Using Simple AI to Improve Diagnosticians’ Performance

There is much hope, but also hype, for using artificial intelligence (AI) systems to improve medical diagnostics and thus reduce avoidable diagnostic errors. However, AI systems are usually not permitted to make automated decisions in high-stakes settings, such as those involving consequential decisions in medicine. Issues of concern range from transparency to accountability to bias. Instead, human decision-makers consult AI advice before making their own final decision (e.g., medical experts may consult a radiology AI system during their diagnostic process). However, since many such AI systems are black boxes that need to be “explained” after the fact, their designers face challenges in establishing trust with domain experts (e.g., medical diagnosticians) and other stakeholders (e.g., patients). We (Keller et al., 2020) argued that the research and practitioner communities should focus more on developing and implementing inherently interpretable, simple AI systems (e.g., simple decision trees). Such models front-load the computational effort to then arrive at a simple decision aid that can be very easily implemented (e.g., as a laminated pocket card for physicians). They augment experts’ decision competence rather than replacing it. We (Keller et al., 2020) presented a case study in the context of the task of postoperative risk stratification in intensive care (i.e., deciding which patients need to be closely monitored after an elective surgery). Our results show that very simple, transparent, and understandable decision trees can achieve a level of performance almost as good as state-of-the-art, black-box machine learning models. Assuming that it is the joint performance of the physician and the AI system that matters (and not how well a system performs on its own), the question remains to what extent any advantage of a more complex, opaque model will be thwarted by possibly lower compliance of experts.
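To make concrete what a simple, inherently interpretable decision aid of this kind might look like, here is a minimal sketch of a short decision tree of the sort that could fit on a pocket card. The cues (`has_heart_disease`, `surgery_hours`, `age_years`), their order, and the thresholds are invented for illustration only; they are not the tree reported in Keller et al. (2020) and have no clinical validity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    # Hypothetical cues, chosen only to illustrate the structure of the tree.
    age_years: int
    surgery_hours: float
    has_heart_disease: bool


def needs_close_monitoring(p: Patient) -> bool:
    """Illustrative three-question decision tree (NOT a validated clinical rule).

    Each question either settles the decision immediately or hands over
    to the next question, so the whole rule can be read top to bottom.
    """
    if p.has_heart_disease:        # Question 1: exit to "monitor closely"
        return True
    if p.surgery_hours <= 2.0:     # Question 2: exit to "standard care"
        return False
    return p.age_years >= 70       # Question 3: final split


print(needs_close_monitoring(Patient(age_years=75, surgery_hours=4.0, has_heart_disease=False)))
```

Any computational effort would go into choosing cues and thresholds from data beforehand. What reaches the physician is only a handful of questions that can be checked without a computer, which is what makes such a rule easy to inspect and to contest.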

New Avenues in Risk Communication: Simulated-Experience and Descriptive Formats

When educating people about risks, we usually resort to describing the risks and their likelihood of occurring (e.g., the side effects of drugs described in drug leaflets). When no such description is available, however, people learn about risks only by experiencing them or their absence (e.g., a patient takes a drug and either experiences a side effect or does not; a physician prescribes a drug and later hears from some number of patients, or from none, about a side effect). As has frequently been found (see the section The Exploring Mind), people react very differently to described versus experienced risks. Intriguingly, this description–experience gap points to a new avenue for risk communication, namely, letting people experience (simulated) risks rather than just read simple, static numbers.
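The contrast between the two formats can be sketched in a few lines of code. The example below is only an assumption-laden illustration: the 5% side-effect risk, the function names `describe` and `simulate_experience`, and the presentation as a row of symbols are invented here and do not reproduce the materials used in our studies.

```python
import random


def describe(risk: float, n: int = 100) -> str:
    """Descriptive format: a single static summary statement."""
    return f"{round(risk * n)} out of {n} patients experience the side effect."


def simulate_experience(risk: float, n_draws: int, seed: int = 0) -> list[bool]:
    """Simulated-experience format: a sequence of individual outcomes.

    Each draw stands for one hypothetical patient; True means that the
    side effect occurred. The seed only makes the example reproducible.
    """
    rng = random.Random(seed)
    return [rng.random() < risk for _ in range(n_draws)]


risk = 0.05  # hypothetical side-effect probability
draws = simulate_experience(risk, n_draws=20)

print(describe(risk))
print("".join("X" if hit else "." for hit in draws))  # one symbol per simulated patient
print(f"Observed frequency in this sample: {sum(draws) / len(draws):.2f}")
```

With small samples, the frequency a person observes can differ noticeably from the stated probability, and rare events may not show up at all. This is one reason why described and experienced risks can leave different impressions.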

We (Wegwarth et al., 2022) explored the potential of risk communication using simulated experience in the domain of strong opioids. Chronic pain affects about 20% of adults globally and is a major cause of decreased quality of life and disability. Evidence shows that even short-term use of strong opioids is associated with only small improvements in pain and function relative to a placebo, no improvement relative to non-opioid medication, and an increased risk of harm. Guidelines caution against long-term use. Notwithstanding these clinical recommendations, opioids have been increasingly used for the management of chronic pain in the United States and Germany. Currently, 80% of long-term prescriptions of strong opioids are for chronic noncancer pain. This suggests a miscalibration of risk perceptions and behavior among those who prescribe, dispense, and take opioids. Can a profoundly different way of communicating risk change this? We ran a randomized controlled clinical trial to examine the effects of experience and description on risk perception and risk behavior in 300 German patients who took opioids to manage chronic noncancer pain. We investigated the effects of either simulated experience or description (Figure 4) on (a) objective risk perception, (b) subjective risk perception, and (c) risk behavior (i.e., continued intake of strong opioids). Patients’ risk perception improved in both formats; those who saw the description (“fact box”) even estimated some outcomes more accurately than those who received the simulated experience. A notable difference, however, emerged in behavior. Those who experienced the simulation were more likely to reduce or terminate their opioid intake and more likely to adopt other therapies. These results suggest that simulated experience may offer a qualitatively different way of communicating risks, one that may have greater potential to change behavior. We are currently conducting more studies to explore this possibility.

Figure: Elsevier

Adapted from Wegwarth et al. (2022)

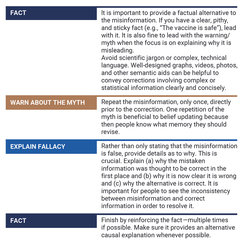

Empowering Citizens to Effectively Communicate About COVID-19 Vaccines and Protect Themselves and Others Against Misinformation and Manipulation

The COVID-19 pandemic has highlighted a global health challenge: hesitancy to receive potentially life-saving vaccinations. Despite extensive research into how to communicate effectively and refute misinformation about vaccines and other controversial topics (e.g., climate change), knowledge and best practices have yet to be widely adopted. To bridge this gap, we (Lewandowsky et al., 2021) created a concise handbook outlining the current best practices for communicating about COVID-19 vaccines and for effectively refuting false information. The handbook is targeted at a broad audience (including journalists, policy makers, researchers, doctors, nurses, teachers, students, and parents) and was designed to boost their competence to communicate effectively about vaccines. It covers common misconceptions about vaccines and explores the psychological, social, and political factors that influence people’s decisions about whether to get vaccinated. The handbook also provides concrete strategies for communicating the benefits of getting vaccinated and for challenging misinformation about the vaccines. For example, the reader learns how to counter false information by using the “debunking sandwich” (Figure 5) and how to inoculate people against common misinformation tactics (“prebunking”). By equipping citizens with the skills and knowledge to communicate effectively about COVID-19 vaccines and to protect themselves and others against misinformation and manipulation, the handbook can help reduce the pervasive vaccine hesitancy that we see globally.

Figure 5. Structure of an effective debunking strategy (“fact sandwich”). From Lewandowsky et al. (2021). The COVID-19 Vaccine Communication Handbook & Wiki.

Adapted from Lewandowsky et al. (2021)
