Cooperative Artificial Intelligence

What mechanisms facilitate successful human–machine cooperation and machine-mediated human cooperation?

Zero-sum interactions such as board and computer games have attracted much interest in artificial intelligence (AI) research, particularly as AI came to surpass human performance. Yet much of human sociality consists of non-zero-sum interactions that involve cooperation and coordination. Throughout its evolutionary past, the success of the human species has largely depended on its unique abilities to cooperate. Introducing AI agents into social life as cooperation partners holds immense potential, but it also presents the challenge of equipping AI systems with capabilities that are compatible with human cooperation. Optimistic views of this kind go back to early thinkers like Norbert Wiener, who envisioned a symbiosis between humans and machines.

This research area therefore studies cooperative human–machine interactions, or cooperative AI for short.

Developing and measuring key cooperative AI concepts relies on machine behavior research. Recent behavioral studies show that dynamic reinforcement learning algorithms can establish and sustain cooperation with humans across various economic games. Interest in settings where people and machines can cooperate is growing across disciplines such as behavioral economics, human–computer interaction, and psychology; a recent review counts more than 160 behavioral studies. A closer look at these studies, however, reveals a fundamental disagreement about a key methodological feature: How should the payoffs for the machine be implemented?

Sample Project: Social Preferences Towards Machines and Humans (Ongoing)

People’s willingness to cooperate with machines strongly depends on the way in which the payoffs for the machine are implemented.

Interest in cooperative AI settings is growing across disciplines. By now, more than 160 studies from various disciplines have examined how people cooperate with machines in behavioral experiments. Our systematic review of the instructions used in these studies reveals that the implementation of the machine payoffs, and the information participants receive about them, differ drastically across studies (March, 2021, Strategic interactions between humans and artificial intelligence: Lessons from experiments with computer players). This matters because how a machine is represented likely shapes humans’ social preferences towards it. To highlight just a few extremes: Some studies use a so-called “token player” who collects the machine’s payoffs. In other studies, participants either receive no information about who earns the machine’s payoffs, or they learn that these payoffs are not paid out at all.

In the Social Preferences Towards Machines and Humans project, we ran an incentivized online experiment to test how the implementation of machine payoffs changes humans’ cooperation with machine partners (see Figure 1 for a complete overview).

Figure 1. Overview of the different between-subject treatments.

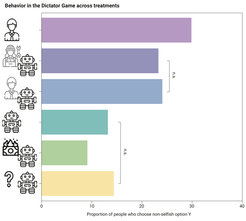

The results suggest that the implementation makes a substantial difference. For instance, in the simple Dictator Game, people decide how much money to send to their machine partner. Here, introducing a token player who earns the payoff almost doubles people’s willingness to share compared to providing no information (see Figure 2). In general, when matched with machine partners, people reveal substantially higher social preferences when they know that human beneficiaries receive the machine payoffs than when they know that no such “human behind the machine” exists. Not informing people about the machine payoffs leads to low social preferences, as people form beliefs about the payoffs in a self-favoring way. Our results thus indicate that the degree to which humans cooperate with machines depends on how the machine’s earnings are implemented and on what people are told about them.

Figure 2. Proportion of participants who share with their counterparts in the Dictator Game across treatments. All pairwise comparisons are significant (t-test with Tukey correction) except for those marked with a bracket in the figure.
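The Dictator Game logic described above can be made concrete with a minimal sketch. It is an illustration only, not the project's experimental software: the treatment labels, the `play_dictator_game` function, and the `DictatorOutcome` fields are hypothetical names, and the example covers just the three payoff implementations mentioned in the text (a token player, payoffs that are not paid out, and no information).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MachinePayoff(Enum):
    """Hypothetical labels for how the machine's earnings are handled."""
    TOKEN_PLAYER = "token_player"      # a beneficiary collects the machine's payoff
    NOT_PAID_OUT = "not_paid_out"      # participants learn that nobody receives it
    NO_INFORMATION = "no_information"  # participants are told nothing


@dataclass(frozen=True)
class DictatorOutcome:
    kept_by_participant: float
    credited_to_machine: float
    # Amount the participant KNOWS reaches a beneficiary; None means "not told".
    known_to_reach_beneficiary: Optional[float]


def play_dictator_game(endowment: float, amount_sent: float,
                       treatment: MachinePayoff) -> DictatorOutcome:
    """Split an endowment between the participant and a machine partner."""
    if not 0 <= amount_sent <= endowment:
        raise ValueError("amount_sent must lie between 0 and the endowment")

    if treatment is MachinePayoff.TOKEN_PLAYER:
        known = amount_sent   # participant knows someone receives the money
    elif treatment is MachinePayoff.NOT_PAID_OUT:
        known = 0.0           # participant knows the money reaches no one
    else:
        known = None          # participant must form their own belief

    return DictatorOutcome(
        kept_by_participant=endowment - amount_sent,
        credited_to_machine=amount_sent,
        known_to_reach_beneficiary=known,
    )


if __name__ == "__main__":
    # Same transfer under each treatment: only the participant's knowledge differs.
    for treatment in MachinePayoff:
        print(treatment.value, play_dictator_game(10.0, 4.0, treatment))
```

The monetary split is identical across treatments in this sketch; what varies is the information available to the participant, which is the dimension the text identifies as driving differences in sharing.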

Sample Project: MyGoodness (Ongoing)

This project explores what factors influence human charitable giving.

In the United States alone, there are over 1.5 million registered charities, which receive half a trillion dollars annually. The scale and relevance of charitable giving have provoked great academic interest. However, the empirical insights remain somewhat fragmented: Charitable giving is commonly studied either in small, homogeneous samples drawn from laboratories or online platforms, or in large field samples with limited variation in treatment. These study characteristics limit the ability to understand how relevant contextual factors interact and to identify cross-cultural variation in the main (average) effects driving charitable giving.

Here, we present findings from an online game we created that enabled us to simultaneously investigate a vast array of factors that influence charitable giving, e.g., how many people profit from it (“effectiveness”), the identifiable victim effect, recipient demographic influences, and deliberate ignorance of aspects of the donation decision. This is akin to running an experiment with tens of thousands of conditions. Over 280,000 people from 200 countries and territories participated in the game, generating over 3 million decisions, some of them incentivized.

In addition to replicating some findings from the relevant literature (e.g., the identifiable victim effect and a preference for younger people), our results reveal two key insights. First, we find that above certain thresholds (helping three or six strangers on average) the charity’s effectiveness plays the dominant role in driving donation decisions across cultures, overshadowing all other effects tested, including a preference for giving funds to oneself or family. Second, we identify heterogeneity in the effect size of different experimental factors and assess which further experimental factors drive it. For example, in the case of the identifiable victim effect, naming a “victim” leads to less charitable giving in 26% of conditions, mainly those involving older recipients. This suggests that while many main effects are statistically significant, their practical significance may not be substantial or may be highly sensitive to other factors, often to the point of completely reversing direction. In summary, our findings paint a more detailed picture of human prosociality and highlight the importance of large-scale, multi-factor experimentation for understanding prosocial behavior.
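To illustrate why crossing several factors is akin to running an experiment with a very large number of conditions, the following sketch enumerates a small factorial design. The factor names and levels are hypothetical and far fewer than those in the actual game, and the assumption that each decision compares two distinct recipient profiles is made only for illustration.

```python
from itertools import product
from math import comb, prod

# Hypothetical factor levels, chosen only for illustration;
# they are NOT the levels used in the MyGoodness game.
factors = {
    "recipients_helped": [1, 2, 3, 6, 10],
    "recipient_named": [False, True],
    "recipient_age_group": ["child", "adult", "older adult"],
    "recipient_gender": ["female", "male"],
    "recipient_region": ["same region", "neighboring region", "distant region"],
}

# Full factorial crossing: one profile per combination of factor levels.
profiles = [dict(zip(factors, levels)) for levels in product(*factors.values())]
n_profiles = len(profiles)

# The number of profiles is the product of the number of levels per factor.
assert n_profiles == prod(len(levels) for levels in factors.values())

# Assumption: each decision pits two distinct profiles against each other,
# so the number of possible conditions grows with the number of unordered pairs.
n_pairings = comb(n_profiles, 2)

if __name__ == "__main__":
    print(f"{n_profiles} profiles, {n_pairings} possible pairings")
    print("Example profile:", profiles[0])
```

Even with only five small factors, the count of possible pairings quickly reaches the tens of thousands, which is why designs of this kind call for very large samples.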

Figure 3. A participant based in western Europe faces a dilemma of how to spend $100. They can either receive $98 themselves, or use the funds to provide nutritious meals to ten male children located in eastern Europe.

Key Reference

Awad, E., Rahwan, I., Yoeli, E., Patil, I., & Rahwan, Z. (2023). Mapping charitable giving preferences from 3 million decisions across the world. Manuscript in preparation.